The Evolution of Low-Latency Video Streaming

Tech Talk

In the past few years, the video streaming industry has seen immense interest in low-latency video streaming protocols. The majority of interest is on delivering videos with a sub-five-second delay, making them comparable with delays in live broadcast TV systems. Attaining such low delay is critical for streaming live sports, gaming, online learning, interactive video applications, and others.

Developing the Technology for Low-Latency Streaming

The delays in conventional live OTT streaming technologies such as HLS and DASH are much longer. They’re caused by relatively long segments (4-10 seconds) and a segment-based delivery model, requiring complete delivery of each media segment before playback. Combined with the buffering strategies used by the HLS or DASH streaming clients, this typically produces delays of 10-30 seconds, or even longer.

To combat delays, recent evolutions of HLS and DASH standards, known as Low-Latency HLS (LL-HLS) and Low-Latency DASH (LL-DASH), have introduced two key tools:

- Chunked video encoding. This is an encoding strategy that produces video segments structured as sequences of much shorter sub-segments or chunks.

- Chunked segment transfer. This is an HTTP transfer mode that enables transmission of shorter video chunks to streaming clients as soon as they are generated.

A streaming client can join a low-latency live stream from any Stream Access Point (SAP) which is made available by the live transcoder on segment boundaries or chunk boundaries. Once joined, a player would only need to buffer the latest video chunk generated by the encoder before decoding and rendering it. Considering that each segment can be split into several chunks (typically 4-10), this reduces the delay significantly.

Several open-source and proprietary implementations of low-latency servers and players are currently available on the market, including one by Brightcove. Many of them have demonstrated lower streaming delay when only a single-bitrate stream is used and when they stream over high-speed network connections. However, their performance under more complex and realistic deployment environments has not been well studied.

Testing the Performance of Low-Latency Streaming

In our paper published in ACM Mile-High-Video 2023 (link forthcoming) as well as a presentation at Facebook@Scale, we reported some results after testing the performance of low-latency video systems.

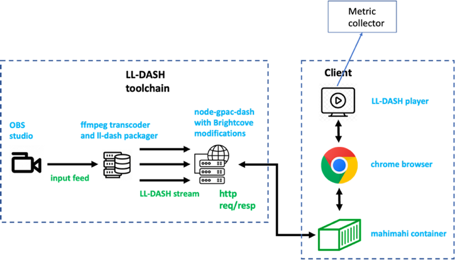

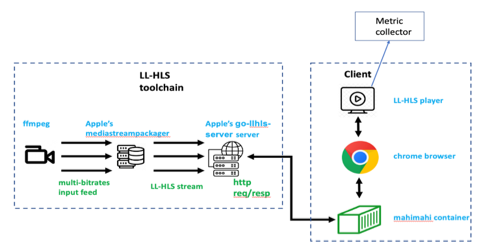

First, we developed an evaluation testbed for both LL-HLS and LL-DASH systems. We then evaluated various low-latency players using this testbed, including Dash.js, HLS.js, Shaka player, and Theo player. We also evaluated several of the latest bitrate adaptation algorithms with optimizations for low-latency live players. The evaluation was based on a series of live streaming experiments. These experiments were repeated using identical video content, encoding profiles, and network conditions (emulated by using traces of real-world networks).

Table 1 shows our live video encoding profiles for generating the multi-bitrates, low-latency DASH/HLS stream.

| Rendition | Video resolution (pixels) | Video codec and profile | Bitrate (kbps) |

|---|---|---|---|

| low | 768 x 432 | H.264 main | 949 |

| mid | 1024 x 576 | H.264 main | 1854 |

| high | 1600 x 900 | H.264 main | 3624 |

| top | 1920 x 1080 | H.264 main | 5166 |

Figure 1 shows the workflows of our low-latency DASH and HLS streaming testbeds.

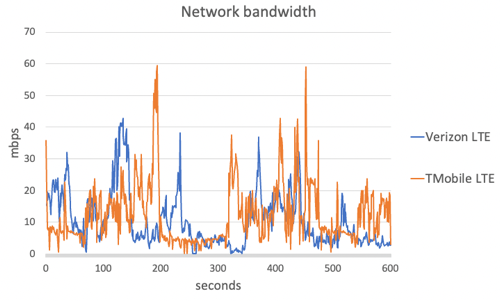

Figure 2 shows the available network bandwidth of our emulated LTE mobile network. The download bandwidth of the low-latency players is controlled by our network emulation tool and the network traces.

A variety of system performance metrics (average stream bitrate, amount of downloaded media data, streaming latency) as well as buffering and stream switching statistics were captured and reported in our experiments.

These results have been subsequently used to describe the observed differences in the performance of LL-HLS and LL-DASH players and systems.

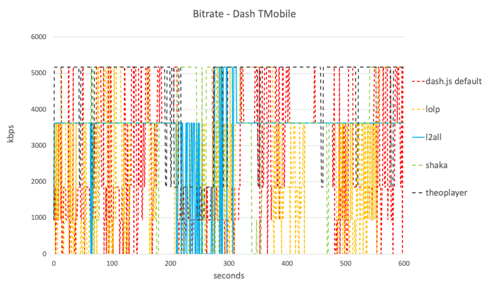

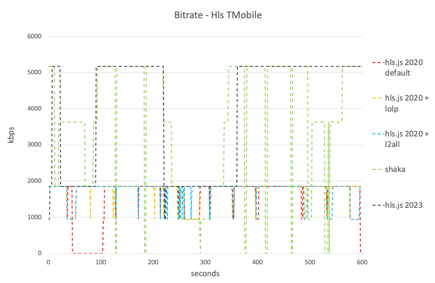

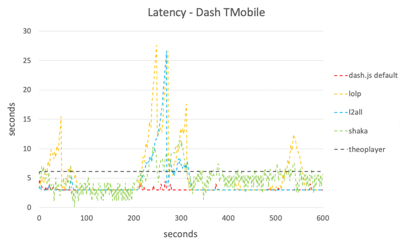

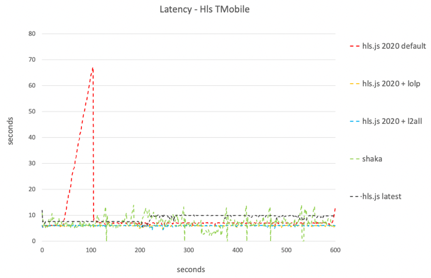

A few plots from our study can be seen in the figures below.

Performance statistics – T-Mobile LTE network

| Player/Algorithm | Avg. bitrate [kbps] | Avg. height [pixels] | Avg. latency [secs] | Latency var. [secs] | Speed var. [%] | Number of switches | Buffer events | Buffer ratio [%] | MBs loaded | Objects loaded |

|---|---|---|---|---|---|---|---|---|---|---|

| DASH.js default | 2770 | 726 | 3.06 | 0.21 | 10.4 | 93 | 38 | 7.99 | 352.2 | 256 |

| DASH.js LolP | 3496 | 853 | 5.65 | 4.59 | 22.7 | 70 | 53 | 21.96 | 369.4 | 210 |

| DASH.js L2all | 3699 | 908 | 4.14 | 3.18 | 19.9 | 5 | 19 | 7.99 | 368 | 147 |

| Shaka player (dash) | 3818 | 916 | 4.92 | 2.06 | 0 | 16 | 5 | 4.66 | 360.3 | 155 |

| THEO player (dash) | 4594 | 993 | 6.16 | 0.01 | 0 | 27 | 0 | 0 | 418.7 | 152 |

| HLS.js default 2020 | 1763 | 562 | 10.08 | 10.91 | 8.1 | 26 | 2 | 9.8 | 130.7 | 589 |

| HLS.js LolP 2020 | 1756 | 560 | 5.97 | 0.2 | 6.1 | 24 | 0 | 0 | 148.1 | 688 |

| HLS.js L2all 2020 | 1752 | 560 | 6 | 0.23 | 5.9 | 34 | 0 | 0 | 133.1 | 686 |

| HLS.js default 2023 | 3971 | 895 | 8.93 | 1.13 | 0 | 8 | 0 | 0 | 360.8 | 613 |

| Shaka player (HLS) | 3955 | 908 | 7.18 | 2.23 | 0 | 14 | 7 | 3.8 | 230 | 475 |

Evaluating the Performance of Low-Latency Streaming

While LL-HLS and LL-DASH worked well in unconstrained network environments, they struggled in low bandwidth or highly variable networks (which are typical in mobile deployments). Observed effects included highly variable delays, inability to prevent buffering, and frequent bandwidth switches or inability to use the available network bandwidth. Some players simply switched into non-low-latency streaming under such challenging network conditions.

As promising as LL-HLS or LL-DASH are in theory, there is still some room for these technologies to mature.

At Brightcove, we are continuing to work on best-in-class implementations of algorithms for low-latency streaming clients as well as encoder and server-side optimizations. We intend to make our support for low-latency streaming highly scalable, reliable, and fully ready for prime time.

This blog was originally written by Yuriy Reznik in 2021 and has been updated for accuracy and comprehensiveness.