How Brightcove Uses Fastly to Decrease Time to First Byte

As the starting point for almost every video playback session, the Playback API is one of the most heavily trafficked Brightcove services. It's also one of the oldest, having powered Brightcove's video streams for around a decade.

In this blog post we'll look at how we completely rewrote, rearchitected, and transparently swapped out the service to bring it in line with the high levels of availability and scalability that users have come to expect from Brightcove.

WHAT IS THE PLAYBACK API?

When a user loads a webpage that contains a Brightcove player, the first call the player makes is to the Playback API, which returns all of the information the player needs to begin downloading and playing back video.

The URL the player calls looks like the following, with variations to allow pre-defined or search-based playlists of multiple videos:

In return, it will receive a large block of JSON that contains metadata about the video and URLs for the video manifest files.

SECURITY

Security around this API is provided by Policy Keys, which are encoded JSON blobs that can contain an account ID, geo allow and deny lists, and a list of domain names to ensure that a given video can only be played back under certain circumstances. Without one of these keys, playback will always be rejected.
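As a rough illustration of what "an encoded JSON blob" could mean in practice, the sketch below round-trips a key through URL-safe base64 over JSON. Both the encoding and every field name here are assumptions made for the example; the real Policy Key format is not described in this post.

```go
package main

import (
	"encoding/base64"
	"encoding/json"
	"fmt"
)

// PolicyKey is a hypothetical shape for the decoded blob. The field names
// are illustrative only and are not Brightcove's actual schema.
type PolicyKey struct {
	AccountID string   `json:"account_id"`
	GeoAllow  []string `json:"geo_allow,omitempty"`
	GeoDeny   []string `json:"geo_deny,omitempty"`
	Domains   []string `json:"domains,omitempty"`
}

// EncodePolicyKey serialises the key as JSON and wraps it in unpadded
// URL-safe base64 (an assumed encoding).
func EncodePolicyKey(pk PolicyKey) (string, error) {
	raw, err := json.Marshal(pk)
	if err != nil {
		return "", fmt.Errorf("marshal policy key: %w", err)
	}
	return base64.RawURLEncoding.EncodeToString(raw), nil
}

// DecodePolicyKey reverses EncodePolicyKey and rejects keys that do not
// carry an account ID.
func DecodePolicyKey(encoded string) (PolicyKey, error) {
	var pk PolicyKey
	raw, err := base64.RawURLEncoding.DecodeString(encoded)
	if err != nil {
		return pk, fmt.Errorf("decode policy key: %w", err)
	}
	if err := json.Unmarshal(raw, &pk); err != nil {
		return pk, fmt.Errorf("parse policy key: %w", err)
	}
	if pk.AccountID == "" {
		return pk, fmt.Errorf("policy key has no account ID")
	}
	return pk, nil
}

func main() {
	encoded, err := EncodePolicyKey(PolicyKey{
		AccountID: "938456",
		GeoAllow:  []string{"AU", "JP"},
		Domains:   []string{"example.com"},
	})
	if err != nil {
		fmt.Println("encode failed:", err)
		return
	}
	pk, err := DecodePolicyKey(encoded)
	if err != nil {
		fmt.Println("playback rejected:", err)
		return
	}
	fmt.Println(pk.AccountID, pk.GeoAllow, pk.Domains)
}
```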

These restrictions can also be stored in Brightcove's CMS at both a per-account and per-video level.

WHY CHANGE IT?

The first incarnation of the Playback API served us well for a long time, but it had begun to show its age when compared to the rest of the Dynamic Delivery services that make up our video playback flow. In particular, it was:

- Hosted in a single geographic region within the United States. This meant that playback would fail for users globally if there were problems in this region. It also added a lot of latency to video startup times when being called from locales like Australasia or Japan.

- Difficult to cache and slow to reflect updates. Although it was partially fronted by a content delivery network (CDN), we could only cache responses for a short time so that users wouldn't have to wait too long to see changes to the video metadata or manifests.

- Written in a different language (Clojure) than the rest of the Dynamic Delivery services (Go).

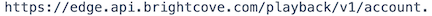

- Difficult to scale, particularly for videos that used geoblocking or IP whitelisting. Because we used a third-party service to look up where a particular playback request was coming from and match it against the video's restrictions, we had to bypass the CDN whenever these restrictions were being used:

When delivering video, we rely heavily on CDNs to cache as much content as possible to allow viewers to download video from someplace geographically close for the smoothest streaming experience. Since the Playback API request sits between the viewer and their chosen video beginning to play, whenever this content isn't cached, we're increasing their wait time along with the chance that they'll leave to view something else.

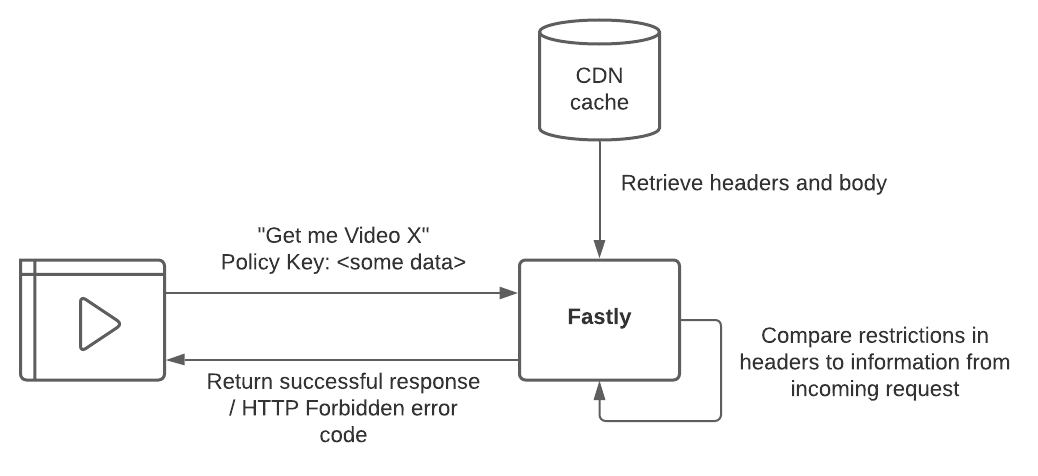

While CDN caching is relatively straightforward for chunks of video or audio, it becomes more complex when trying to cache the response of an API that can potentially allow or deny a request (e.g., "Get me information about Video X") based on a combination of viewer location, IP, the website the video is being viewed from, and whether or not they're using a proxy or VPN.

In addition, we have to make sure we're combining the client-side Policy Key and the server-side account configuration and video metadata to apply these restrictions correctly.

THE VISION - CACHE EVERYTHING ALL THE TIME

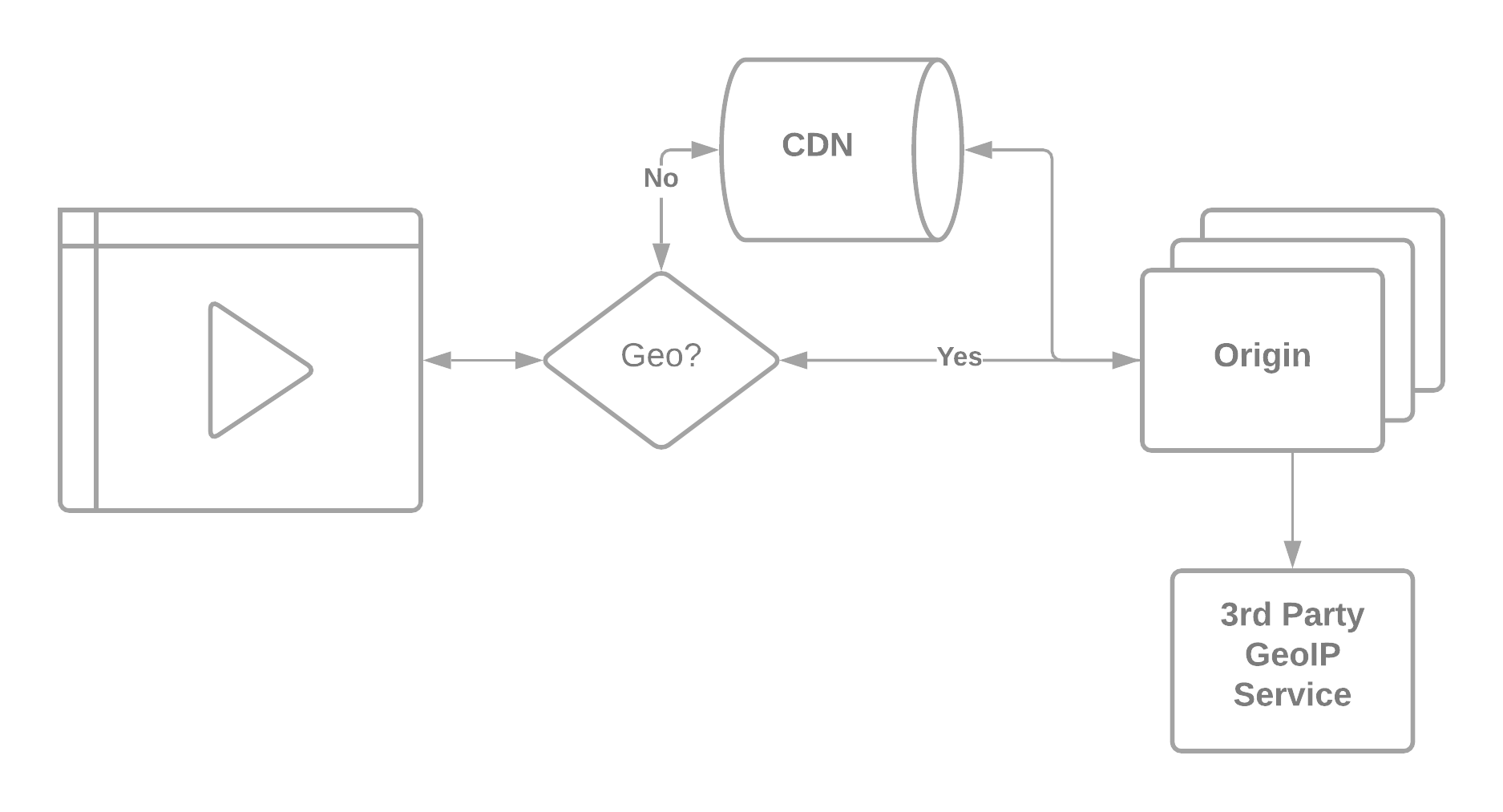

We use Fastly as one of our CDNs within Dynamic Delivery, particularly because its VCL (Varnish Configuration Language) lets us perform advanced logic at the edge without having to make a request back to the origin.

With the addition of a set of geolocation variables on every request, we came up with a design that would allow us to cache Playback API responses at the CDN indefinitely and perform all of the logic around access to the response within Fastly.

We did this by having the origin return all of the data needed to make georestriction decisions to the CDN, along with the response body:

First, the CDN checks if the requested video is already in cache. If not, it calls the Playback API to retrieve it.

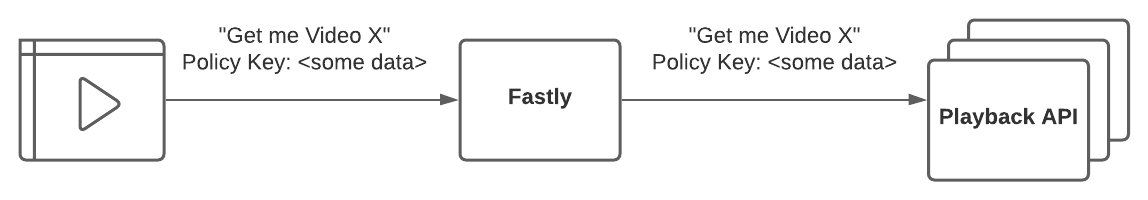

The Playback API then calls the CMS API to retrieve all of the information about the video, plus any viewing restrictions that might be stored at a per-video or per-account level.

The Playback API decodes the Policy Key and overlays any restrictions from it on top of the ones it discovered from the CMS API, then returns these as HTTP headers along with the standard response body.
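The overlay step can be sketched in Go as below. The header names (X-Geo-Allow, X-Geo-Deny, X-Allowed-Domains), the Restrictions type, and the rule that a non-empty Policy Key list replaces the CMS list are all assumptions made for illustration; the post does not specify the actual headers or merge semantics.

```go
package main

import (
	"fmt"
	"net/http"
	"strings"
)

// Restrictions is a hypothetical container for viewing restrictions.
type Restrictions struct {
	GeoAllow []string
	GeoDeny  []string
	Domains  []string
}

// Overlay starts from the CMS restrictions and lets any non-empty list
// from the Policy Key take precedence (an assumed merge rule).
func Overlay(cms, policyKey Restrictions) Restrictions {
	merged := cms
	if len(policyKey.GeoAllow) > 0 {
		merged.GeoAllow = policyKey.GeoAllow
	}
	if len(policyKey.GeoDeny) > 0 {
		merged.GeoDeny = policyKey.GeoDeny
	}
	if len(policyKey.Domains) > 0 {
		merged.Domains = policyKey.Domains
	}
	return merged
}

// RestrictionHeaders renders the merged restrictions as response headers
// that the CDN can cache next to the body. Empty lists emit no header.
func RestrictionHeaders(r Restrictions) http.Header {
	h := http.Header{}
	set := func(name string, values []string) {
		if len(values) > 0 {
			h.Set(name, strings.Join(values, ","))
		}
	}
	set("X-Geo-Allow", r.GeoAllow)
	set("X-Geo-Deny", r.GeoDeny)
	set("X-Allowed-Domains", r.Domains)
	return h
}

func main() {
	cms := Restrictions{GeoAllow: []string{"US"}, Domains: []string{"example.com"}}
	key := Restrictions{GeoAllow: []string{"AU", "JP"}}
	for name, values := range RestrictionHeaders(Overlay(cms, key)) {
		fmt.Println(name+":", values[0])
	}
}
```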

Fastly will now return or deny the response to the viewer who just requested it and store the response and its headers in cache, so the next time a request for this video comes in, we can do all that logic within Fastly and avoid a trip to the origin.
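In production this decision runs in VCL at the edge, using Fastly's per-request geolocation variables. Purely to make the logic concrete, here is the same kind of check written in Go. The header names and the Allowed function are hypothetical, and the real rules (IP lists, proxy and VPN detection) are richer than this sketch.

```go
package main

import (
	"fmt"
	"net/http"
	"strings"
)

// contains reports whether a comma-separated list includes value,
// ignoring case and surrounding whitespace.
func contains(list, value string) bool {
	for _, item := range strings.Split(list, ",") {
		if strings.EqualFold(strings.TrimSpace(item), value) {
			return true
		}
	}
	return false
}

// Allowed decides whether a cached response may be served to a viewer,
// using only the restriction headers stored with the cache entry plus
// facts about the viewer that the CDN already knows.
func Allowed(cached http.Header, country, domain string) bool {
	if deny := cached.Get("X-Geo-Deny"); deny != "" && contains(deny, country) {
		return false
	}
	if allow := cached.Get("X-Geo-Allow"); allow != "" && !contains(allow, country) {
		return false
	}
	if domains := cached.Get("X-Allowed-Domains"); domains != "" && !contains(domains, domain) {
		return false
	}
	return true
}

func main() {
	cached := http.Header{}
	cached.Set("X-Geo-Allow", "AU,JP")
	cached.Set("X-Allowed-Domains", "example.com")

	fmt.Println(Allowed(cached, "AU", "example.com")) // true
	fmt.Println(Allowed(cached, "US", "example.com")) // false
}
```

Because the decision depends only on the cached headers and the viewer's own attributes, a single cache entry can serve every viewer, allowed or denied, without contacting the origin.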

CACHE INVALIDATION - AN EASY PROBLEM

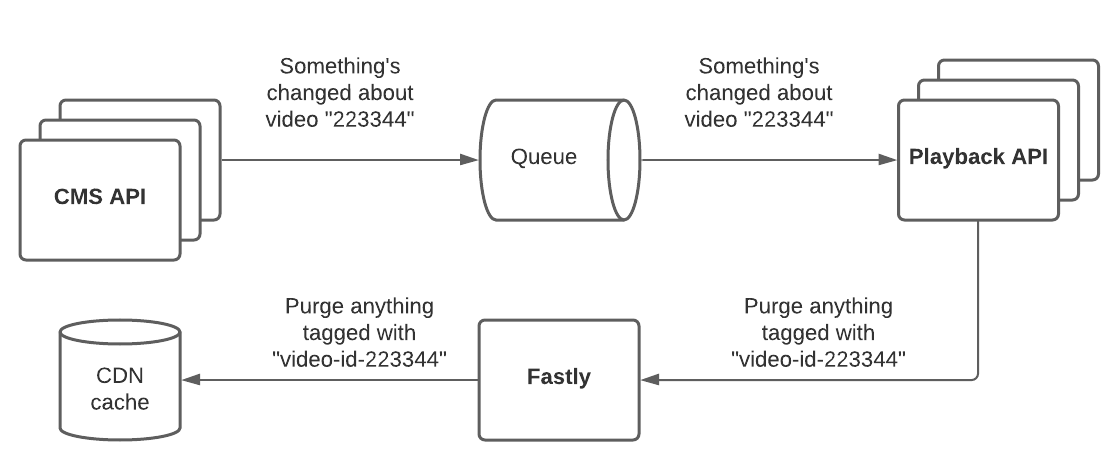

Now that we were caching indefinitely at the CDN, the next problem was how to purge that cache when necessary – for example, when something changes with the video (its description edited, DRM enabled, geo-restriction altered, etc.) or on the account that owns the video (e.g., new IP added to the IP whitelist).

The naive approach that the previous iteration of the system took was to set a header that told the CDN to fetch a fresh copy after 20 minutes, so users could wait up to 20 minutes to see their changes reflected.

With our new architecture and Fastly's Surrogate Keys, we improved on this to give us the best of both worlds: indefinite caching and almost instant updates when something changes.

The first step was to begin using these Surrogate Keys. The Playback API returns its responses with the Surrogate-Key header set, which instructs Fastly to tag the CDN cache entry with whatever values it contains – for example, Surrogate-Key: video-id-132413 account-id-938456. This lets us automate the purging of either a specific video ID or all videos for a particular account ID with a single Fastly API call.
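A minimal Go sketch of both halves follows: building the Surrogate-Key value, and constructing a purge-by-key request against Fastly's purge endpoint (POST /service/{service_id}/purge/{key}, authenticated with the Fastly-Key header). The function names, service ID, and token are placeholders, and the request is only built here, not sent.

```go
package main

import (
	"fmt"
	"net/http"
	"net/url"
)

// SurrogateKeys builds the space-separated Surrogate-Key header value
// for a response, following the naming shown in the example above.
func SurrogateKeys(videoID, accountID string) string {
	return "video-id-" + videoID + " account-id-" + accountID
}

// NewPurgeRequest builds a purge-by-surrogate-key request. The caller is
// responsible for sending it and checking the response status.
func NewPurgeRequest(serviceID, apiToken, key string) (*http.Request, error) {
	endpoint := "https://api.fastly.com/service/" + url.PathEscape(serviceID) +
		"/purge/" + url.PathEscape(key)
	req, err := http.NewRequest(http.MethodPost, endpoint, nil)
	if err != nil {
		return nil, fmt.Errorf("build purge request: %w", err)
	}
	req.Header.Set("Fastly-Key", apiToken)
	req.Header.Set("Accept", "application/json")
	return req, nil
}

func main() {
	// Origin side: tag the response so it can be purged later.
	fmt.Println("Surrogate-Key:", SurrogateKeys("132413", "938456"))

	// Purge side: placeholder service ID and token, request not sent.
	req, err := NewPurgeRequest("SERVICE_ID", "API_TOKEN", "account-id-938456")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(req.Method, req.URL.String())
}
```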

Next, we subscribed the Playback API to a change feed that the CMS API emits, which lets us know any time something changes with a video or account. Each change then triggers a purge of the matching Surrogate Key.

Once this purge happens, the next request that comes in will receive a fresh copy from the Playback API that contains whatever changes were made.
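The mapping from a change notification to the key that gets purged might look something like the following. The ChangeEvent type and its fields are invented for this sketch, since the post does not describe the change feed's actual message format.

```go
package main

import "fmt"

// ChangeEvent is a hypothetical change-feed message. Kind is either
// "video" or "account".
type ChangeEvent struct {
	Kind      string
	AccountID string
	VideoID   string
}

// PurgeKey picks the narrowest Surrogate Key that covers the change:
// one video for a video edit, every video on the account for an
// account-level edit.
func PurgeKey(ev ChangeEvent) (string, error) {
	switch ev.Kind {
	case "video":
		if ev.VideoID == "" {
			return "", fmt.Errorf("video change without a video ID")
		}
		return "video-id-" + ev.VideoID, nil
	case "account":
		if ev.AccountID == "" {
			return "", fmt.Errorf("account change without an account ID")
		}
		return "account-id-" + ev.AccountID, nil
	default:
		return "", fmt.Errorf("unknown change kind %q", ev.Kind)
	}
}

func main() {
	events := []ChangeEvent{
		{Kind: "video", AccountID: "938456", VideoID: "132413"},
		{Kind: "account", AccountID: "938456"},
	}
	for _, ev := range events {
		key, err := PurgeKey(ev)
		if err != nil {
			fmt.Println("skipping event:", err)
			continue
		}
		fmt.Println("purge", key)
	}
}
```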

TL;DR – DID IT WORK?

Yes!

The new Playback API has let us overcome the limitations of the old system – it's written in Go, containerised and deployed in multiple global regions, and autoscales on demand.

With the new CDN architecture, all Playback API requests are now cacheable, and the high cache hit rates are translating into vastly improved "time to first byte" for viewers, particularly in Asia and Australasia. One customer shared player data showing average response times down from 300 ms to below 50 ms. That's great, because everybody is happier when videos start to play fastly.